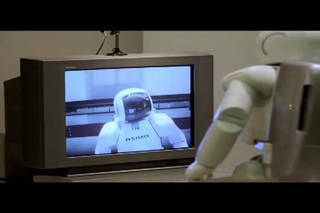

Humanoid Robot LOLA – Dynamic Multi-Contact Locomotion

This video shows our humanoid robot LOLA performing various multi-contact maneuvers. The robot is effectively blind: it does not use any camera-based input. For these experiments the navigation module is disabled, so the foothold positions and hand contact points are set manually according to the current environment setup. However, disturbances (pushing, uneven terrain, a rolling board) are not known to the robot in advance and are compensated by our online stabilization methods. Note that ALL algorithms run onboard and in real time. The robot’s joints are position-controlled.

Technical Details:

Walking speed: up to 0.5 m/s (1.8 km/h), depending on the maneuver

Runtime of walking pattern generation (computed once per motion): about 30 ms to plan the full motion in the most complex scenario, a real-time factor of roughly 600!

Runtime of stabilization and inverse kinematics (cyclic, at 1 kHz): 350 µs (mean)
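As a rough sanity check on these figures, the short Python sketch below works through the arithmetic behind them. It assumes the real-time factor is defined as the duration of the planned motion divided by the planning time (the description does not state its definition), and the constant and function names are hypothetical, chosen only for illustration.

```python
# Hypothetical names; the numbers are taken from the technical details above.
PLANNING_TIME_S = 0.030        # walking pattern generation, most complex scenario
REALTIME_FACTOR = 600          # approximate value quoted above
CYCLE_RATE_HZ = 1000           # stabilization + inverse kinematics loop rate
MEAN_CYCLE_RUNTIME_S = 350e-6  # mean runtime per control cycle


def implied_motion_duration(planning_time_s: float, realtime_factor: float) -> float:
    """Seconds of motion covered by one planning run.

    Assumes: real-time factor = motion duration / planning time.
    """
    return planning_time_s * realtime_factor


def cycle_utilization(runtime_s: float, rate_hz: float) -> float:
    """Fraction of one control period consumed by the given runtime."""
    period_s = 1.0 / rate_hz
    return runtime_s / period_s


duration = implied_motion_duration(PLANNING_TIME_S, REALTIME_FACTOR)
util = cycle_utilization(MEAN_CYCLE_RUNTIME_S, CYCLE_RATE_HZ)

print(f"implied motion duration: {duration:.1f} s")
print(f"control period: {1e6 / CYCLE_RATE_HZ:.0f} us, mean utilization: {util:.0%}")
```

Under that assumption, one 30 ms planning run covers about 18 s of motion, and the mean stabilization and IK runtime uses roughly a third of each 1 ms control period. Worst-case runtimes are not given here, so this says nothing about timing margins in the worst case.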

For more information on LOLA please see our project’s website:

This work is supported by